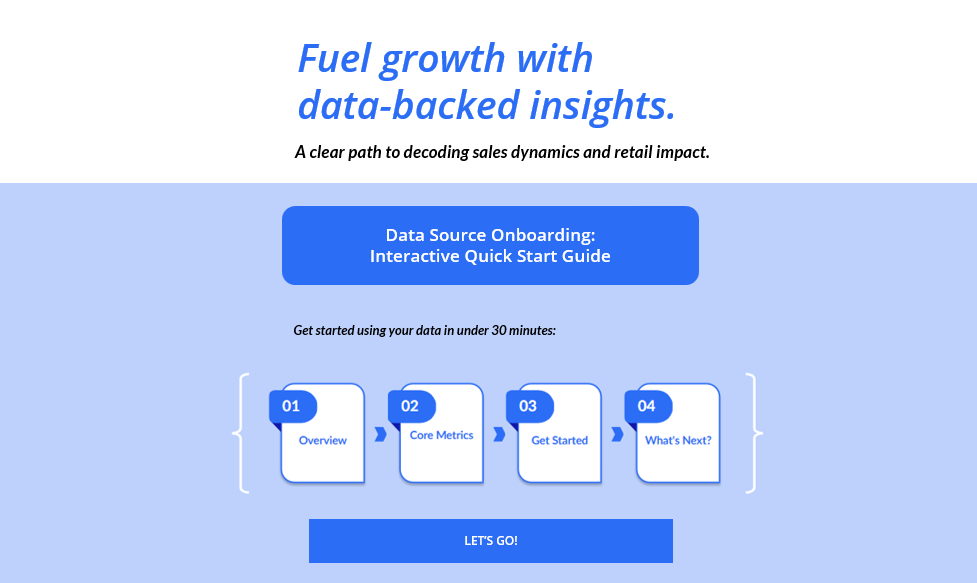

Data Source Onboarding: Quick Start for New Users

Get up to speed quickly on the essential data knowledge needed to build a basic report.

Project Overview

I designed and developed a Quick Start Learning Program to onboard new users of a specific data source. Many learners were unfamiliar with the underlying metrics and struggled to interpret data within the reporting platform. This program provided a clear, accessible entry point by combining an interactive eLearning module, a concise how‑to video, and supporting job aids. The experience was intentionally digital‑first and scalable, giving learners immediate confidence with foundational concepts and guiding them through building a basic report step‑by‑step.

Audience

Customers new to the data source and reporting platform

Tools Used

Articulate Rise, ScreenPal, Contentful, Microsoft Word, PowerPoint, Asana

Responsibilities

Scoped and sequenced tasks across ADDIE phases using Asana.

Conducted analysis using existing documentation, SME insights, and learner feedback.

Designed clear, foundational learning objectives aligned to Bloom’s Taxonomy.

Built the Quick Start module in Articulate Rise with layered interactivity and guided practice.

Produced a how‑to video in ScreenPal to model key workflows.

Created job aids and reference articles, then converted them to Markdown for publishing in Contentful.

Coordinated LMS and Contentful publishing workflows for a seamless launch.

Monitored early engagement and learner confidence to inform future enhancements.

Note: Original materials cannot be shared due to company confidentiality. Visuals included are masked.

The Challenge

New users struggled to understand core measurement concepts and apply them effectively within the reporting platform. Existing documentation was dense, fragmented, and difficult to navigate, making onboarding slow and overwhelming. Learners lacked confidence in interpreting foundational metrics and had no streamlined entry‑level learning path to bridge the gap between technical documentation and more advanced analytics training.

The Solution

I scoped and delivered a Quick Start Learning Program designed to give new users immediate confidence with the reporting platform. The program introduced essential concepts, emphasized the most critical metrics, and guided learners through building a basic report using clear, step‑by‑step instruction. By combining interactive eLearning, a concise video tutorial, and supporting job aids, the experience offered multiple reinforcement points and served as the first stage in a larger learning journey.

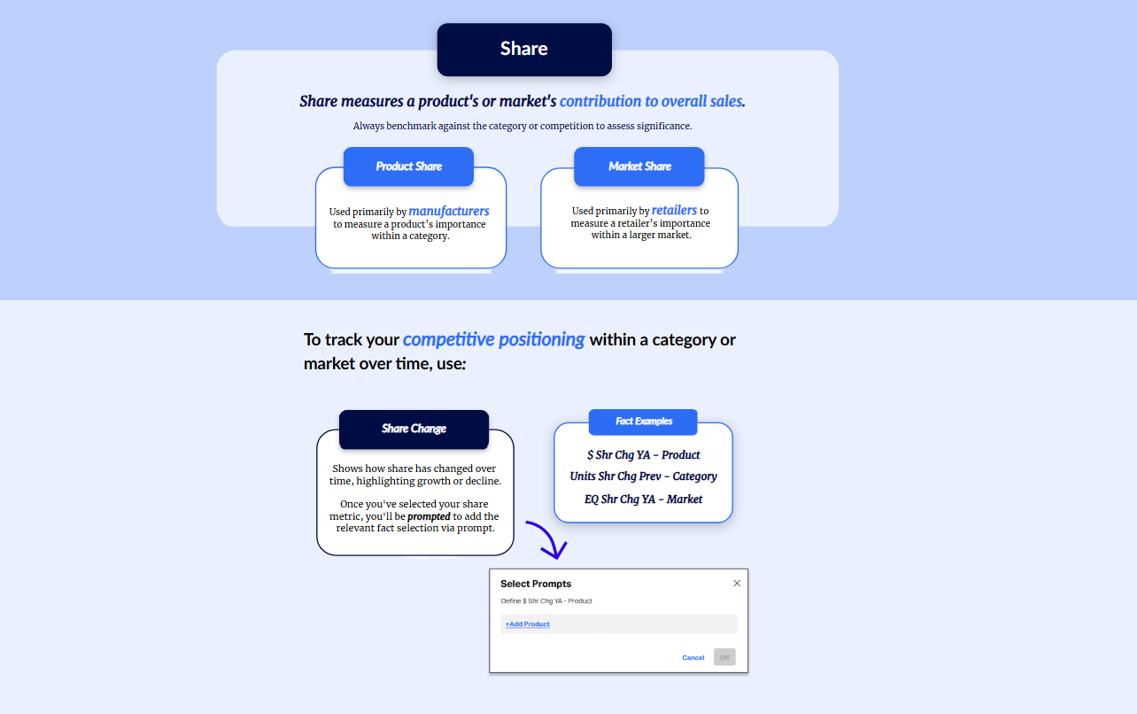

Core Metrics Visuals (Rise)

My Process

Learning Model and Theory Integration

This project was grounded in:

Bloom’s Taxonomy — to define clear, foundational learning objectives.

Gagné’s Nine Events of Instruction — to structure the learning flow and support engagement.

Adult Learning Principles — to ensure relevance, clarity, and immediate application.

These models guided decisions across each ADDIE phase to ensure the program was accessible, confidence‑building, and aligned with real‑world reporting tasks.

Analysis

I drew on several years of experience designing and maintaining training and documentation for this data source, giving me deep insight into user pain points and workflows. I reviewed stakeholder insights and existing materials to identify redundancies and gaps, and collaborated with SMEs to clarify business use cases and reporting requirements. This ensured the program was grounded in real‑world challenges and aligned with customer expectations.

Gagné Integration: Stimulated recall by connecting new content to familiar reporting challenges and prior resources.

Design

Defined clear learning objectives aligned to Bloom’s Taxonomy (Remember → Understand → Apply).

Structured the program into modular segments: Overview, Core Metrics, Get Started, What’s Next.

Applied Gagné’s Nine Events to gain attention, set expectations, and provide learning guidance.

Designed metric explanations grounded in real‑world use cases, along with guided report‑building steps.

Positioned higher‑order Bloom’s objectives for future modules in the learning journey.

Gagné Integration:

Informed learners of objectives.

Gained attention through bold visuals and real‑world scenarios.

Provided learning guidance with embedded tips and walkthroughs.

Development

Built the Quick Start module in Articulate Rise with layered interactivity and scenario‑based examples.

Produced a concise how‑to video in ScreenPal to model key workflows.

Created job aids and reference articles, then converted them to Markdown for publishing in Contentful to make them more accessible.

Integrated knowledge checks with immediate feedback to reinforce learning.

Designed guided practice activities where learners built a basic report step‑by‑step.

Gagné Integration:

Presented content through concise visuals and examples.

Elicited performance through guided practice.

Provided feedback via knowledge checks.

Implementation

Implementation focused on a coordinated, seamless launch. I created SCORM packages for the Rise module and published them to the LMS, while a developer published the reference articles to Contentful. I managed timelines, aligned deliverables, and facilitated stakeholder communication to ensure the eLearning module and job aids launched simultaneously across platforms.

Gagné Integration: Enhanced retention and transfer by ensuring ongoing access to job aids and reference articles.

Evaluation

I embedded mechanisms to measure effectiveness and inform future iterations. Knowledge checks provided immediate feedback and tracked comprehension, while post‑launch evaluation strategies captured learner satisfaction, completion rates, and confidence gains. Engagement metrics continue to be monitored to identify strengths and opportunities for improvement. As more data is collected, I will assess key takeaways and share recommendations for scaling the program.

Gagné Integration: Assessed performance through knowledge checks and self‑assessment prompts.

Reflection: Project Takeaways

Key Learnings

Incorporate a wider range of scenarios to reflect diverse customer contexts.

The initial Quick Start focused on a single, representative reporting example. Future iterations could include optional scenario variations tailored to different industries or use cases, helping learners see themselves more directly in the content and increasing transferability.

This project reinforced the value of designing clear, foundational learning experiences that reduce cognitive load and build early confidence. The modular structure, layered interactivity, and guided practice helped learners bridge the gap between dense documentation and real‑world reporting tasks. The digital‑first approach also proved highly scalable, supporting consistent onboarding across customer teams.